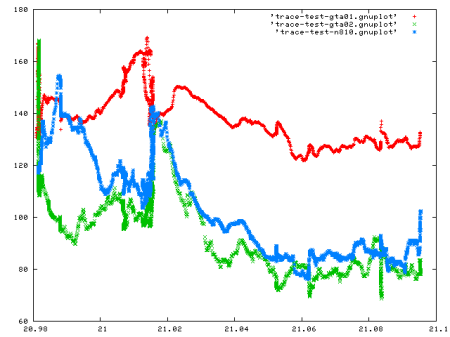

I started poking at the SMedia Glamo chip in the GTA02 this week. First I played with the Linux framebuffer driver and later with decoding MPEG in hardware, and now I have some code ready. I was challenged by messages like this on the Openmoko lists. Contrary to the opinion spreading across these messages, we’re not doomed and we still have a graphics accelerator in a phone (which is coolness on its own). And it’s quite a hackable one.

I first had a look at the libglamo code – a small library written some time ago by Chia-I Wu (olv) and Harald Welte (laf0rge) for accessing some of the Glamo’s submodules (engines). I asked the authors if I could use their code and release it under the GPL and they liked the idea, so I stitched together libglamo and mplayer and added the necessary glue drivers. This wasn’t all straightforward because mplayer isn’t really prepared for doing decoding in hardware, even though some support was present. Today I uploaded my mplayer git tree here – see below for what it can and cannot do. There’s lots more that can be improved but the basic stuff is there and seems to work. To clone, do this:

cg-clone git://repo.or.cz/mplayer/glamo.git

The Glamo fact sheet claims it can do MPEG-4 and H.263 encoding/decoding at 352×288 at up to 30fps and at 640×480 at up to 12fps. Since it also does all the scaling/rotation in hardware, I hoped I would be able to play a 352×288 video scaled to 640×480 at full frame-rate, but this doesn’t seem to be the case. The decoding is pretty fast but the scaling takes a while and rotation adds another bit of overhead. That said, even if mplayer is not keeping up with the video’s frame-rate it still shows 0.0% CPU usage in top. There are still many obvious optimisations that can be done (and some less obvious ones that I don’t know about, not being much into graphics).

Usage considerations:

- Pass “-vo glamo” to use the glamo driver. The driver should probably be a VIDIX subdriver in mplayer’s source, but that would take much more work as VIDIX is very incomplete now, so glamo is a separate output driver. (In particular, VIDIX seems to support only the “BES” (backend scaler?) type of hw acceleration, which the Glamo also does, but it does much more too.) Like VIDIX, it requires root access to run (we should move the driver to the kernel once there exists a kernel API for video decoders – or maybe to X).

- It only supports MPEG-4 videos, so you should recode if you want to watch something on the phone without using much CPU. H.263 would probably only require some trivial changes in the code. For completeness – MPEG-4 is not backwards compatible with MPEG-1 or MPEG-2, it’s a separate codec. It’s the one used by most digital cameras and you can convert to and from it with Fabrice Bellard’s ffmpeg. A deblocking filter is supported by the Glamo but the driver doesn’t support it yet. For other codecs, “-vo glamo” will try to help in converting the decoded frames from YUV to RGB (untested), which is normally the last step of decoding.

- The “glamo” driver can take various parameters. Add “:rotate=90” (or 180 or 270) to rotate the picture – the MPEG engine doesn’t know about the xrandr rotation and the two won’t work together. Add “:nosleep” to avoid sleeping in mplayer – this yields slightly better FPS but takes up all your CPU, spinning.

- Supports the “xover” output driver, pass “-vo xover:glamo” to use that (not very useful with a window manager that makes all windows full-screen anyway).

- Only works with the 2.6.22.5 Openmoko kernels. There were some changes in the Openmoko 2.6.24 patches that disabled access to the MPEG engine, but since we don’t have a bisectable git tree I can’t be bothered. UPDATE: A 2.6.24 patch is here – note that it can eat your files, no responsibility assumed. I guess it can also be accounted for in mplayer, will check. My rant about the lack of change history in git is still valid – while I loved the switch to git, the SVN was being maintained better in this regard.

- In the mplayer git tree linked above I enabled anonymous unmoderated push access so improvements are welcome and easy to get in.
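
To make the options above a bit more concrete, here is a small Python sketch that only builds the command lines, it doesn’t run anything. The helper names (glamo_vo, mplayer_cmd, recode_cmd) and the file names are made up for illustration. Chaining “:rotate=90:nosleep” in one “-vo” value is my assumption based on mplayer’s usual colon-separated sub-option syntax, and the ffmpeg flags are just one plausible way to recode to MPEG-4 at 352×288, not something tested against the driver.

```python
import shlex

VALID_ROTATIONS = (0, 90, 180, 270)


def glamo_vo(rotate=0, nosleep=False):
    """Build the value passed to mplayer's -vo option for the glamo driver.

    Hypothetical helper: it only joins the sub-options mentioned in the
    list above with colons.
    """
    if rotate not in VALID_ROTATIONS:
        raise ValueError("rotate must be 0, 90, 180 or 270")
    parts = ["glamo"]
    if rotate:
        parts.append(f"rotate={rotate}")
    if nosleep:
        # Assumption: sub-options can be chained, as with other -vo drivers.
        parts.append("nosleep")
    return ":".join(parts)


def mplayer_cmd(video, rotate=0, nosleep=False):
    """Return the argv list for playing a video through the glamo driver."""
    return ["mplayer", "-vo", glamo_vo(rotate, nosleep), video]


def recode_cmd(src, dst, size="352x288"):
    """Return an argv list that recodes src to MPEG-4 with ffmpeg.

    -vcodec mpeg4 picks ffmpeg's MPEG-4 encoder and -s sets the frame size.
    """
    return ["ffmpeg", "-i", src, "-vcodec", "mpeg4", "-s", size, dst]


if __name__ == "__main__":
    # Print the commands instead of running them; remember the driver
    # needs root, so the mplayer line would be run as root on the phone.
    print(shlex.join(recode_cmd("clip.mov", "clip.avi")))
    print(shlex.join(mplayer_cmd("clip.avi", rotate=90, nosleep=True)))
```

Building argv lists rather than one big string keeps file names with spaces safe if you later hand the list to subprocess.run.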

With respect to the Linux framebuffer poking, I wanted to see how much of the text console rendering can be moved to the hardware side, and it seems the hw is not lacking anything (scrolling, filling rectangles, cursor) compared to other accelerated video cards, and even the code already exists in Dodji Seketeli’s Xglamo. I’m sure sooner or later we’ll have it implemented in the kernel too. For now I got the framebuffer to use hardware cursor drawing (alas still with issues).

Brazilian cities reminded me a lot of Peru, which was the only place I had seen in America (this comparison must seem awfully ignorant to anyone who lives in some place between Brazil and Peru). We spent one week in the Ceará region seeking out the best places for paragliding. One of the spots was the launch pad near Nossa Senhora Imaculada
