Last week I charged the different batteries and took a GTA01 Neo, a GTA02 Neo and a Nokia N810 with me to enable their GPSes on my way home from school. Then I saved the traces they logged and loaded into JOSM to have a look (GTA01, GTA02, N810 – gpx files converted using gpsbabel from nmea)

The devices made respectively 11.28km, 12.12km and 11.07km routes (sitting in the same bag the whole time).

All in all I like the GTA01 accuracy the most although all three sometimes have horrible errors. They all three have accuracy about near the bottom line of usability for OSM mapping for a city, so if you get a GPS with that in mind, it may be slightly disappointing. All three are quite good at keeping the fix while indoor but everytime there’s not enough real input available they will invent their own rather than admit (if you had physics experiments at high-school and had to prove theories that way, you know how this works), resulting in run-offs to alternative realities – especially the N810 likes to make virtual trips. They all three apparently do advanced extrapolation and most of the time get things right, but the GTA01 GPS (the hammerhead) very notably assumes in all the calculations that the vehicle in which you move has a certain inertia and treats tight turns as errors. I’m on a bike most of the time and can turn very quickly and it feels as if the firmware was made for a car (SpeedEvil thinks rather a supertanker).

It’s suprising how well they all three can determine the direction in which they’re pointing even when not moving (the GTAs more so). The firmwares seem to rely on that more than on the actual position data sometimes. This results in a funny effect that the errors they make are very consistent even if very big – once the GPS thinks it’s on the other side of a river from you (or worse in the middle), it will stay there as long as you keep going along the river.

I’m curious to see what improvement the galileo system brings over GPS.

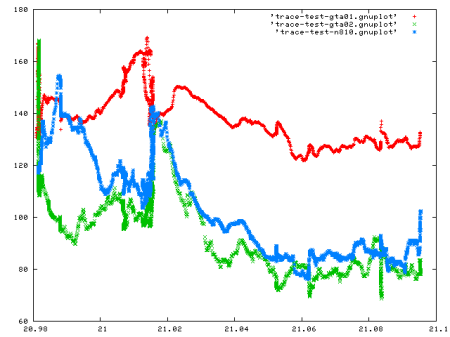

UPDATE: I was curious about the precision with which the altitude is reported, which can’t be seen in JOSM. First I found that the $GPGGA sentences on my GTA01 have always 000.0 in the elevation field, but the field before it (normally containing HDOP) has a value that kind of makes sense as an altitude, so I swapped the two fields (HDOP value should be < 20.0 I believe?). Then I loaded the data into gnuplot to generate this chart:

The horizontal axis has longitude and vertical the elevation in metres above mean sea level. Err, sure? I might have screwed something up but I checked everything twice. Except the GTA01 which might be a different value completely – but the is some correlation. I’m not sure which one to trust now.

Brazil cities reminded me a lot of Peru, which was the only place I had seen in America (this comparison must seem awfully ignorant to anyone who lives in some place between Brazil and Peru). We spent one week in Ceará region seeking out best places for paragliding. One of the spots was the launch pad near Nossa Senhora Imaculada

Brazil cities reminded me a lot of Peru, which was the only place I had seen in America (this comparison must seem awfully ignorant to anyone who lives in some place between Brazil and Peru). We spent one week in Ceará region seeking out best places for paragliding. One of the spots was the launch pad near Nossa Senhora Imaculada